Regularization

Feb 13, 2025

Review of last lecture

On Tuesday, we learned about robust regression.

- Heteroskedasticity

- Heavy-tailed distributions

- Median regression

These were all models for the observed data.

Today, we will focus on prior specifications for the regression coefficients, $\boldsymbol{\beta}$.

Sparsity in regression problems

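Supervised learning can be cast as the problem of estimating a set of coefficients $\boldsymbol{\beta} = (\beta_1, \ldots, \beta_p)^\top$ that relate a set of predictors $\mathbf{x}_i$ to an outcome $Y_i$.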

This is a central focus of statistics and machine learning.

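Challenges arise in “large-$p$” problems, where the number of predictors $p$ is large relative to (or even exceeds) the sample size $n$.

Finding a sparse solution, where some $\beta_j$ are zero, improves interpretability and guards against overfitting.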

Bayesian sparse estimation

From a Bayesian-learning perspective, there are two main sparse-estimation alternatives: discrete mixtures and shrinkage priors.

Discrete mixtures have been very popular, with the spike-and-slab prior being the gold standard.

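- Easy to force $\beta_j = 0$ exactly, through the spike.

- Easy to let large $\beta_j$ remain unshrunk, through the slab.

Shrinkage priors force $\beta_j$ toward zero with a continuous distribution: small coefficients are shrunk strongly, but never exactly to zero.

- In recent years, shrinkage priors have become the dominant approach to Bayesian sparsity.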

Global-local shrinkage

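Let’s assume the linear model $Y_i = \alpha + \mathbf{x}_i\boldsymbol{\beta} + \epsilon_i$, with $\epsilon_i \stackrel{iid}{\sim} N(0, \sigma^2)$ for $i = 1, \ldots, n$.

Sparsity can be induced into $\boldsymbol{\beta}$ with a global-local prior,
$$\beta_j \mid \lambda_j, \tau \stackrel{ind}{\sim} N(0, \lambda_j^2\tau^2), \qquad \lambda_j \stackrel{iid}{\sim} f(\lambda_j), \qquad \tau \sim f(\tau),$$
where $\tau$ is the global shrinkage parameter and the $\lambda_j$ are local shrinkage parameters.

The degree of sparsity depends on the choice of $f(\lambda_j)$ and $f(\tau)$.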

Spike-and-slab prior

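- Discrete parameter specification, $\lambda_j \in \{0, 1\}$ with $P(\lambda_j = 1) = \pi$, and $\tau = c$, a large slab scale.

The number of zeros is dictated by $\pi$: a priori, the expected number of nonzero coefficients is $\pi p$.

Discrete parameters cannot be declared in Stan! They have to be summed (marginalized) out of the model instead.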

Spike-and-slab prior

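- Spike-and-slab can be written more generally as a two-component mixture of Gaussians,
$$\beta_j \sim \pi\, N(0, c^2) + (1 - \pi)\, N(0, \epsilon^2), \qquad \epsilon \ll c.$$

Often $\epsilon \to 0$, so the spike becomes a point mass at zero.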
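Because Stan cannot sample the indicator $\lambda_j$, the usual workaround is to evaluate the mixture density $\pi\, N(\beta_j \mid 0, c^2) + (1-\pi)\, N(\beta_j \mid 0, \epsilon^2)$ directly on the log scale. The Python sketch below (an illustrative choice of language; the function names and the values of $\pi$, $c$, $\epsilon$ are assumptions) does this with a log-sum-exp and also recovers $P(\lambda_j = 1 \mid \beta_j)$, the weight of the slab component at a given $\beta_j$. In Stan, the same marginalization is typically written with `log_mix` or `log_sum_exp`.

```python
import math


def normal_lpdf(x, sd):
    """Log density of N(0, sd^2) evaluated at x."""
    return -0.5 * math.log(2 * math.pi) - math.log(sd) - 0.5 * (x / sd) ** 2


def log_sum_exp(a, b):
    """Numerically stable log(exp(a) + exp(b))."""
    m = max(a, b)
    return m + math.log(math.exp(a - m) + math.exp(b - m))


def spike_slab_lpdf(beta, pi, c, eps):
    """log[pi * N(beta | 0, c^2) + (1 - pi) * N(beta | 0, eps^2)], lambda_j summed out."""
    slab = math.log(pi) + normal_lpdf(beta, c)
    spike = math.log(1 - pi) + normal_lpdf(beta, eps)
    return log_sum_exp(slab, spike)


def slab_probability(beta, pi, c, eps):
    """P(lambda_j = 1 | beta_j): share of the mixture density coming from the slab."""
    slab = math.log(pi) + normal_lpdf(beta, c)
    return math.exp(slab - spike_slab_lpdf(beta, pi, c, eps))


# Hypothetical settings: 20% prior inclusion, wide slab (c = 2), narrow spike (eps = 0.05).
for b in (0.0, 0.1, 1.0):
    print(b, round(slab_probability(b, pi=0.2, c=2.0, eps=0.05), 3))
```

Values of $\beta_j$ near zero are attributed almost entirely to the spike, while values well outside the spike's width are attributed to the slab.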

Ridge regression

Ridge regression is motivated by extending linear regression to the setting where:

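- there are too many predictors (sparsity is desired) and/or,

- the predictors are highly correlated (multicollinearity).

The OLS estimate becomes unstable: $\hat{\boldsymbol{\beta}}_{\text{OLS}} = (\mathbf{X}^\top\mathbf{X})^{-1}\mathbf{X}^\top\mathbf{Y}$ requires inverting $\mathbf{X}^\top\mathbf{X}$, which is singular when $p > n$ and nearly singular under strong collinearity.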

Ridge regression

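The ridge estimator minimizes the penalized sum of squares (with the intercept omitted, e.g., after centering $\mathbf{Y}$),
$$\hat{\boldsymbol{\beta}}_{\text{ridge}} = \arg\min_{\boldsymbol{\beta}}\ \|\mathbf{Y} - \mathbf{X}\boldsymbol{\beta}\|_2^2 + \lambda\|\boldsymbol{\beta}\|_2^2, \qquad \lambda \ge 0.$$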

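- Adding the penalty $\lambda\|\boldsymbol{\beta}\|_2^2$ shrinks the coefficients toward zero; larger $\lambda$ means more shrinkage.

- Adding the term $\lambda\mathbf{I}$ to $\mathbf{X}^\top\mathbf{X}$ makes it invertible for any $\lambda > 0$, giving $\hat{\boldsymbol{\beta}}_{\text{ridge}} = (\mathbf{X}^\top\mathbf{X} + \lambda\mathbf{I})^{-1}\mathbf{X}^\top\mathbf{Y}$.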
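A small pure-Python sketch of the closed-form ridge estimator $(\mathbf{X}^\top\mathbf{X} + \lambda\mathbf{I})^{-1}\mathbf{X}^\top\mathbf{Y}$, assuming centered data and no intercept. The toy data (two nearly collinear predictors) and the helper names `solve` and `ridge` are made up for illustration. Comparing $\lambda = 0$ (OLS) with $\lambda > 0$ shows the coefficients being pulled toward zero.

```python
def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [list(row) + [b[i]] for i, row in enumerate(A)]  # augmented matrix [A | b]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def ridge(X, y, lam):
    """(X^T X + lam * I)^{-1} X^T y for X given as a list of rows (no intercept)."""
    p = len(X[0])
    A = [[sum(row[j] * row[k] for row in X) + (lam if j == k else 0.0)
          for k in range(p)] for j in range(p)]
    b = [sum(row[j] * yi for row, yi in zip(X, y)) for j in range(p)]
    return solve(A, b)


# Toy centered data with two nearly collinear predictors (made up for illustration).
X = [[-1.5, -1.49], [-0.5, -0.52], [0.5, 0.51], [1.5, 1.50]]
y = [-1.2, -0.7, 0.4, 1.5]
for lam in (0.0, 0.1, 1.0):
    print(lam, ridge(X, y, lam))
```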

Bayesian ridge prior

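Ridge regression can be obtained using the following global-local shrinkage prior,
$$\lambda_j = 1 \quad \text{for all } j, \qquad \tau \text{ fixed}.$$

This is equivalent to:
$$\beta_j \mid \tau \stackrel{iid}{\sim} N(0, \tau^2).$$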

How is this equivalent to ridge regression?

Bayesian ridge prior

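- Up to an additive constant (treating $\sigma$ and $\tau$ as fixed and omitting $\alpha$), the negative log-posterior is
$$-\log p(\boldsymbol{\beta} \mid \mathbf{Y}) = \frac{1}{2\sigma^2}\|\mathbf{Y} - \mathbf{X}\boldsymbol{\beta}\|_2^2 + \frac{1}{2\tau^2}\|\boldsymbol{\beta}\|_2^2 + \text{const}.$$

The posterior mean and mode are
$$\mathbb{E}[\boldsymbol{\beta} \mid \mathbf{Y}] = \left(\mathbf{X}^\top\mathbf{X} + \frac{\sigma^2}{\tau^2}\mathbf{I}\right)^{-1}\mathbf{X}^\top\mathbf{Y}.$$

Since the posterior is Gaussian, its mean and mode coincide, and both equal $\hat{\boldsymbol{\beta}}_{\text{ridge}}$ with $\lambda = \sigma^2/\tau^2$.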
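A one-predictor numerical check of this equivalence, written in Python as an illustration (the data values and function names are assumptions). It computes the conjugate posterior mean of $\beta$ under $y_i \sim N(x_i\beta, \sigma^2)$ and $\beta \sim N(0, \tau^2)$ from the posterior precision, and compares it with the ridge estimate at $\lambda = \sigma^2/\tau^2$.

```python
def ridge_1d(x, y, lam):
    """Ridge estimate for a single predictor without intercept: sum(xy) / (sum(x^2) + lam)."""
    sxy = sum(xi * yi for xi, yi in zip(x, y))
    sxx = sum(xi * xi for xi in x)
    return sxy / (sxx + lam)


def posterior_mean_1d(x, y, sigma, tau):
    """Posterior mean of beta for y_i ~ N(x_i * beta, sigma^2) and beta ~ N(0, tau^2)."""
    sxy = sum(xi * yi for xi, yi in zip(x, y))
    sxx = sum(xi * xi for xi in x)
    precision = sxx / sigma**2 + 1 / tau**2  # likelihood precision + prior precision
    return (sxy / sigma**2) / precision


x = [0.5, -1.0, 1.5, 2.0]
y = [0.8, -1.1, 1.7, 2.6]
sigma, tau = 1.5, 0.5
print(posterior_mean_1d(x, y, sigma, tau), ridge_1d(x, y, sigma**2 / tau**2))
```

The two numbers agree because dividing numerator and denominator of the posterior mean by $1/\sigma^2$ turns the prior precision $1/\tau^2$ into the penalty $\sigma^2/\tau^2$.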

Bayesian inference inherently performs regularization: the prior plays the role of the penalty!

Lasso regression

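The least absolute shrinkage and selection operator (lasso) estimator minimizes the penalized sum of squares,
$$\hat{\boldsymbol{\beta}}_{\text{lasso}} = \arg\min_{\boldsymbol{\beta}}\ \|\mathbf{Y} - \mathbf{X}\boldsymbol{\beta}\|_2^2 + \lambda\|\boldsymbol{\beta}\|_1, \qquad \|\boldsymbol{\beta}\|_1 = \sum_{j=1}^p |\beta_j|.$$

Lasso is desirable because it can set some $\hat{\beta}_j$ exactly to zero, performing variable selection and estimation at once.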
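To see how exact zeros arise, assume (only for this illustration) an orthonormal design, $\mathbf{X}^\top\mathbf{X} = \mathbf{I}$. The lasso objective then separates into $\sum_j (\beta_j - z_j)^2 + \lambda|\beta_j|$ plus a constant, with $z_j = \mathbf{x}_j^\top\mathbf{Y}$ the OLS estimate, and each minimizer is $z_j$ soft-thresholded at $\lambda/2$. A minimal Python sketch (function names are hypothetical):

```python
def soft_threshold(z, t):
    """Return sign(z) * max(|z| - t, 0)."""
    if z > t:
        return z - t
    if z < -t:
        return z + t
    return 0.0


def lasso_orthonormal(z, lam):
    """Lasso solution of ||Y - X b||^2 + lam * ||b||_1 when X^T X = I, given z = X^T Y."""
    return [soft_threshold(zj, lam / 2) for zj in z]


print(lasso_orthonormal([3.0, -0.4, 1.2, -2.5], lam=2.0))
```

Coefficients with $|z_j| \le \lambda/2$ are set exactly to zero; the rest are shrunk toward zero by $\lambda/2$.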

Bayesian lasso prior

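Lasso regression can be obtained using the following global-local shrinkage prior,
$$\lambda_j^2 \stackrel{iid}{\sim} \text{Exponential}(\text{rate} = 1/2).$$

This is equivalent to:
$$\beta_j \mid \tau \stackrel{iid}{\sim} \text{Laplace}(0, \tau), \qquad p(\beta_j \mid \tau) = \frac{1}{2\tau}\exp\left(-\frac{|\beta_j|}{\tau}\right).$$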

How is this equivalent to lasso regression?

Bayesian lasso prior

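- Up to an additive constant (treating $\sigma$ and $\tau$ as fixed and omitting $\alpha$), the negative log-posterior is
$$-\log p(\boldsymbol{\beta} \mid \mathbf{Y}) = \frac{1}{2\sigma^2}\|\mathbf{Y} - \mathbf{X}\boldsymbol{\beta}\|_2^2 + \frac{1}{\tau}\|\boldsymbol{\beta}\|_1 + \text{const}.$$

Lasso is recovered by specifying: $\tau = 2\sigma^2/\lambda$.

The posterior mode is $\hat{\boldsymbol{\beta}}_{\text{lasso}}$.

As $\lambda$ increases (equivalently, as $\tau$ decreases), more coefficients of the posterior mode are set exactly to zero.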
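A one-dimensional check of the mode correspondence, sketched in Python. It assumes a single coefficient with $\|\mathbf{x}\|_2 = 1$, so the likelihood in $\beta$ reduces to $z \sim N(\beta, \sigma^2)$ with $z = \mathbf{x}^\top\mathbf{Y}$. The posterior mode is found by brute-force grid search and compared with the lasso solution (soft-thresholding $z$ at $\lambda/2$) under $\lambda = 2\sigma^2/\tau$. Function names and the grid settings are illustrative assumptions.

```python
def neg_log_posterior(beta, z, sigma, tau):
    """-log p(beta | z) up to a constant, for z ~ N(beta, sigma^2) and beta ~ Laplace(0, tau)."""
    return (z - beta) ** 2 / (2 * sigma**2) + abs(beta) / tau


def grid_mode(z, sigma, tau, lo=-5.0, hi=5.0, step=1e-4):
    """Posterior mode by brute-force search over an evenly spaced grid on [lo, hi]."""
    n_steps = int(round((hi - lo) / step))
    grid = (lo + k * step for k in range(n_steps + 1))
    return min(grid, key=lambda b: neg_log_posterior(b, z, sigma, tau))


def lasso_solution(z, lam):
    """Soft-threshold z at lam / 2: the 1-D lasso solution when ||x|| = 1."""
    t = lam / 2
    return z - t if z > t else (z + t if z < -t else 0.0)


sigma, tau = 1.0, 1.0
lam = 2 * sigma**2 / tau  # the correspondence tau = 2 sigma^2 / lambda
for z in (1.5, 0.7, -2.0):
    print(z, grid_mode(z, sigma, tau), lasso_solution(z, lam))
```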

Bayesian lasso does not work

There is a consensus that the Bayesian lasso does not work well.

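It does not yield exact zeros in the posterior: only the posterior mode is sparse, while posterior draws and the posterior mean are not. Its exponential tails also over-shrink large coefficients.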
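The first point can be seen numerically. The Python sketch below assumes a single coefficient with $z \sim N(\beta, \sigma^2)$ and a Laplace$(0, \tau)$ prior (a simplifying setup chosen for illustration), approximates the posterior on a grid, and reports its mode and mean. For $z = 0.7$ and $\sigma = \tau = 1$ the mode is exactly zero, yet the posterior mean is clearly positive, and no posterior draw would ever equal zero.

```python
import math


def posterior_summary(z, sigma, tau, lo=-10.0, hi=10.0, step=1e-3):
    """Grid approximation to the posterior of beta for z ~ N(beta, sigma^2), beta ~ Laplace(0, tau).

    Returns (mode, mean) of the grid-approximated posterior.
    """
    n_steps = int(round((hi - lo) / step))
    grid = [lo + k * step for k in range(n_steps + 1)]
    log_dens = [-(z - b) ** 2 / (2 * sigma**2) - abs(b) / tau for b in grid]
    m = max(log_dens)
    weights = [math.exp(ld - m) for ld in log_dens]  # unnormalized posterior on the grid
    total = sum(weights)
    mean = sum(b * w for b, w in zip(grid, weights)) / total
    mode = grid[log_dens.index(m)]
    return mode, mean


mode, mean = posterior_summary(z=0.7, sigma=1.0, tau=1.0)
print(mode, mean)
```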

The gold-standard sparsity-inducing prior in Bayesian statistics is the horseshoe prior.

Relevance vector machine

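Before we get to the horseshoe, let’s look at one more global-local prior, the one underlying the relevance vector machine (RVM).

This model can be obtained using the following prior,
$$\lambda_j^2 \stackrel{iid}{\sim} \text{Inverse-Gamma}(\nu/2, \nu/2).$$

- This is equivalent to:
$$\beta_j \mid \tau \stackrel{iid}{\sim} t_\nu(0, \tau),$$
a Student-$t$ prior with $\nu$ degrees of freedom and scale $\tau$.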
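A Monte Carlo sketch of this scale-mixture identity in Python (an illustrative choice; `rvm_prior_draws` is a hypothetical helper). It draws $1/\lambda_j^2$ from a Gamma distribution with shape and rate $\nu/2$, which makes $\lambda_j^2$ inverse-gamma, and forms $\beta_j = \tau\lambda_j z_j$ with $z_j \sim N(0,1)$. Python's `random.gammavariate` takes shape and *scale*, hence the `2 / nu`. With $\nu = 1$ the draws should behave like Cauchy$(0, \tau)$, for which $P(|\beta_j| \le \tau) = 1/2$.

```python
import math
import random


def rvm_prior_draws(n_draws, nu, tau, seed=1):
    """Draw beta = tau * lambda * z with lambda^2 ~ Inv-Gamma(nu/2, nu/2) and z ~ N(0, 1)."""
    rng = random.Random(seed)
    draws = []
    for _ in range(n_draws):
        # 1 / lambda^2 ~ Gamma(shape = nu/2, rate = nu/2), i.e. scale = 2/nu
        precision = rng.gammavariate(nu / 2, 2 / nu)
        lam = 1 / math.sqrt(precision)
        draws.append(tau * lam * rng.gauss(0.0, 1.0))
    return draws


draws = rvm_prior_draws(200_000, nu=1.0, tau=1.0)
print(sum(abs(b) <= 1.0 for b in draws) / len(draws))  # compare with 0.5 for a Cauchy
```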

Horseshoe prior

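The horseshoe prior is specified as,
$$\beta_j \mid \lambda_j, \tau \sim N(0, \lambda_j^2\tau^2), \qquad \lambda_j \stackrel{iid}{\sim} C^+(0, 1), \qquad \tau \sim C^+(0, \tau_0).$$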

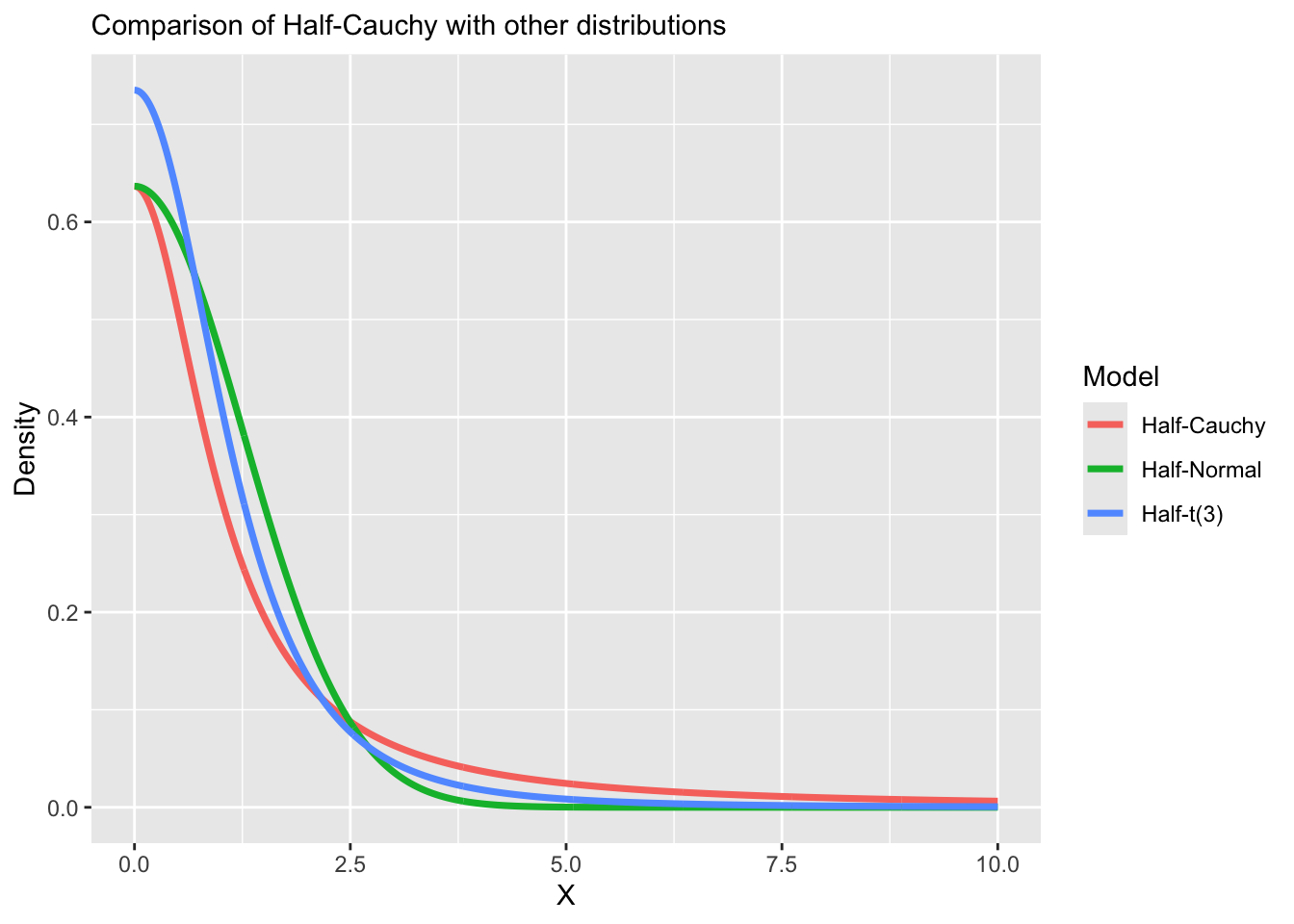

Half-Cauchy distribution

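A random variable $X$ has a half-Cauchy distribution with scale $s > 0$, written $X \sim C^+(0, s)$, if its density is
$$f(x) = \frac{2}{\pi s\left(1 + (x/s)^2\right)}, \qquad x \ge 0.$$

- The half-Cauchy distribution with scale $s$ is the distribution of $|X|$ when $X \sim \text{Cauchy}(0, s)$; it has no finite mean, and its median is $s$.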
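A half-Cauchy variable with scale $s$ has CDF $F(x) = (2/\pi)\arctan(x/s)$ for $x \ge 0$, which inverts in closed form, so draws can be generated from uniform random numbers. A minimal Python sketch (function names are hypothetical):

```python
import math
import random


def half_cauchy_pdf(x, s):
    """Density 2 / (pi * s * (1 + (x/s)^2)) on x >= 0."""
    return 2 / (math.pi * s * (1 + (x / s) ** 2)) if x >= 0 else 0.0


def half_cauchy_cdf(x, s):
    """CDF (2/pi) * atan(x/s) on x >= 0."""
    return (2 / math.pi) * math.atan(x / s) if x >= 0 else 0.0


def half_cauchy_quantile(u, s):
    """Inverse CDF: the x solving (2/pi) * atan(x/s) = u, for 0 <= u < 1."""
    return s * math.tan(math.pi * u / 2)


def half_cauchy_draws(n, s, seed=1):
    """Inverse-CDF sampling from C+(0, s)."""
    rng = random.Random(seed)
    return [half_cauchy_quantile(rng.random(), s) for _ in range(n)]


print(half_cauchy_quantile(0.5, 2.0))  # the median equals the scale s
```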

Half-Cauchy distribution in Stan

In Stan, the half-Cauchy distribution can be specified by declaring the parameter with a lower bound of zero (`<lower = 0>`) and giving it a Cauchy prior, e.g., `cauchy_lpdf(tau | 0, tau0)`.

Half-Cauchy distribution

Horseshoe prior

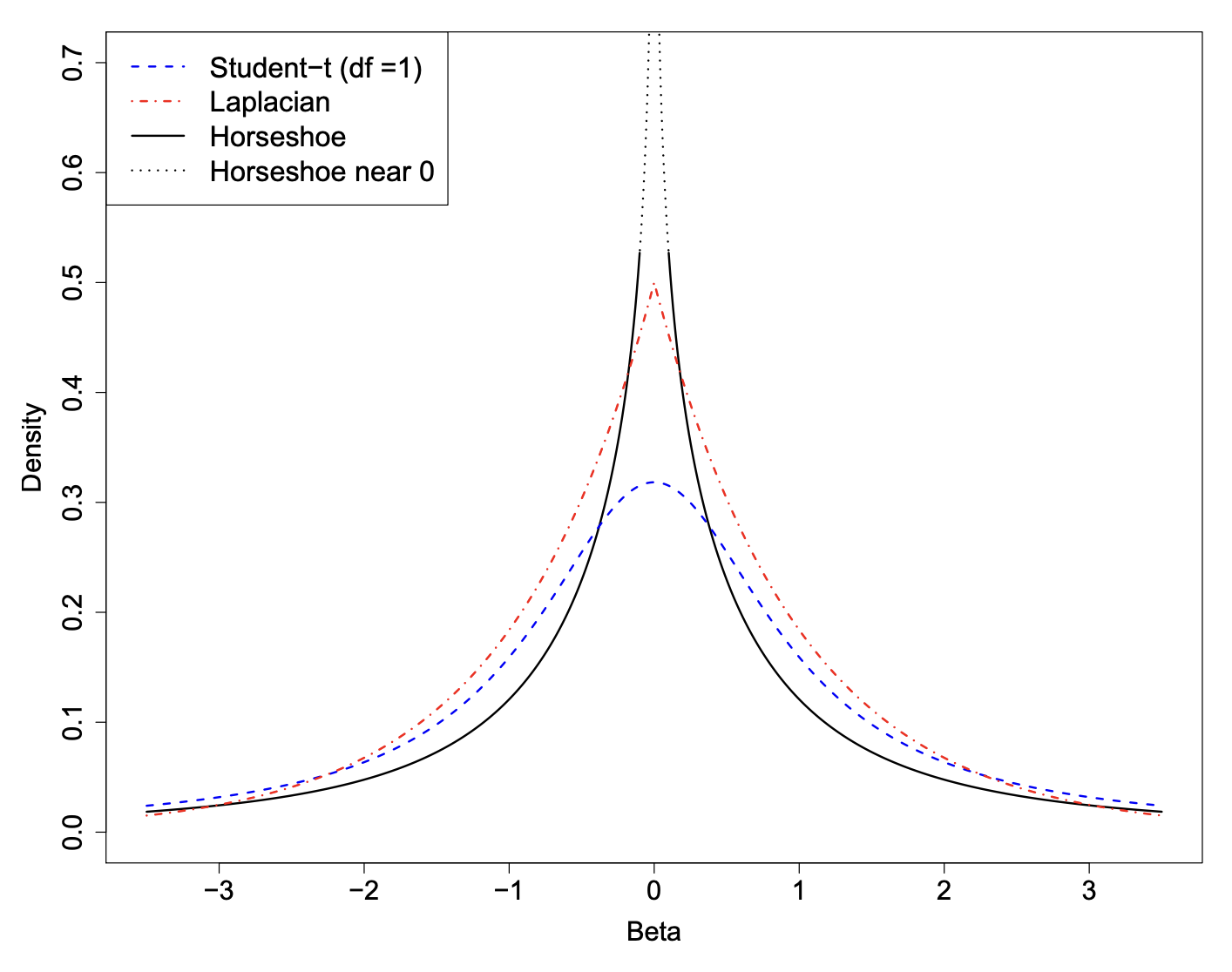

The horseshoe prior has two interesting features that make it particularly useful as a shrinkage prior for sparse problems.

It has flat, Cauchy-like tails that allow strong signals to remain large (that is, un-shrunk) a posteriori.

It has an infinitely tall spike at the origin that provides severe shrinkage for the zero elements of $\boldsymbol{\beta}$.

As we will see, these are key elements that make the horseshoe an attractive choice for handling sparse vectors.

Relation to other shrinkage priors

Horseshoe density

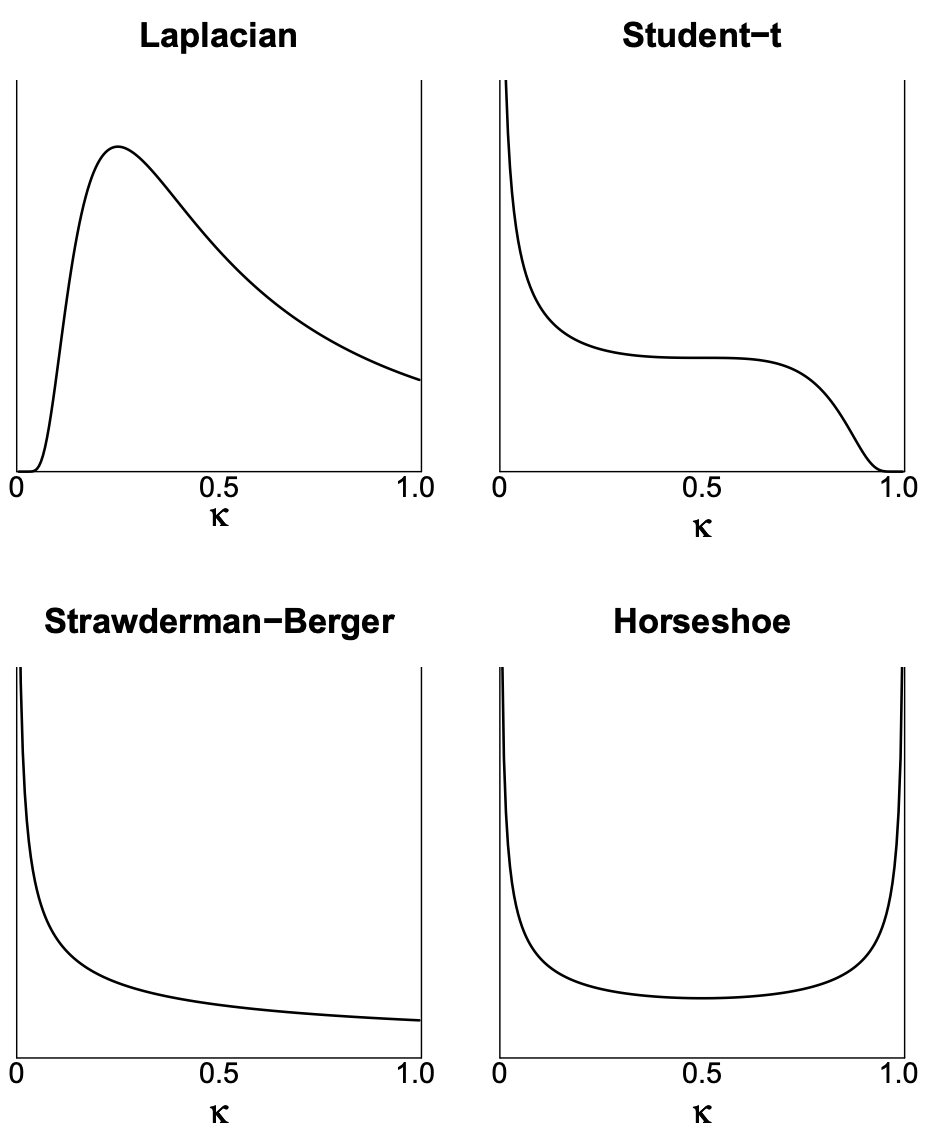

Shrinkage of each prior

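Define the posterior mean of $\beta_j$, conditional on $\lambda_j$, $\tau$, and $\sigma$, as $\bar{\beta}_j$, and let $\hat{\beta}_j$ denote its maximum likelihood (OLS) estimate.

The following relationship holds, assuming zero-mean, uncorrelated predictors so that $\mathbf{X}^\top\mathbf{X} = n\,\text{diag}(s_1^2, \ldots, s_p^2)$, with $s_j^2$ the variance of predictor $j$:
$$\bar{\beta}_j = (1 - \kappa_j)\hat{\beta}_j, \qquad \kappa_j = \frac{1}{1 + n\sigma^{-2}\tau^2 s_j^2\lambda_j^2}.$$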

Standardization of predictors

In regularization problems, predictors are standardized (to mean zero and standard deviation one).

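This means that $s_j^2 = 1$ for every $j$, so that
$$\kappa_j = \frac{1}{1 + n\sigma^{-2}\tau^2\lambda_j^2}.$$

Shrinkage parameter: $\kappa_j \in [0, 1]$, where $\kappa_j = 0$ means no shrinkage and $\kappa_j = 1$ means complete shrinkage to zero.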
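A direct Python translation of the shrinkage factor for standardized, uncorrelated predictors, $\kappa_j = 1/(1 + n\sigma^{-2}\tau^2\lambda_j^2)$, and of the conditional posterior mean $(1 - \kappa_j)\hat{\beta}_j$. The function names and example numbers are illustrative assumptions.

```python
def shrinkage_factor(lam, tau, sigma, n):
    """kappa_j = 1 / (1 + n * sigma^-2 * tau^2 * lambda_j^2) for standardized predictors."""
    return 1.0 / (1.0 + n * tau**2 * lam**2 / sigma**2)


def shrunk_estimate(beta_hat, lam, tau, sigma, n):
    """Conditional posterior mean (1 - kappa_j) * beta_hat_j."""
    return (1.0 - shrinkage_factor(lam, tau, sigma, n)) * beta_hat


# With n = 100, sigma = 1, tau = 0.1: lambda_j = 1 gives kappa_j = 1/2 (halfway shrinkage),
# while a very large lambda_j lets the OLS estimate through almost untouched.
for lam in (0.01, 1.0, 100.0):
    print(lam, shrinkage_factor(lam, 0.1, 1.0, 100), shrunk_estimate(2.0, lam, 0.1, 1.0, 100))
```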

Shrinkage parameter

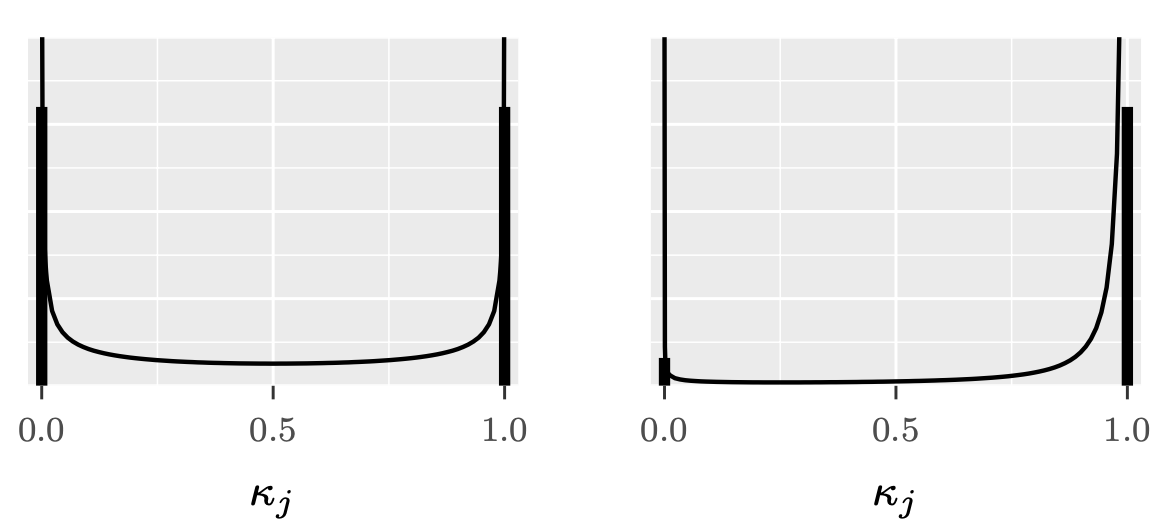

Horseshoe shrinkage parameter

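Choosing $\lambda_j \sim C^+(0, 1)$ gives, when $n\sigma^{-2}\tau^2 = 1$, $\kappa_j \sim \text{Beta}(1/2, 1/2)$: a density that is unbounded at both $0$ and $1$ and shaped like a horseshoe.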

This horseshoe-shaped shrinkage profile expects to see two things a priori:

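- Strong signals ($\kappa_j \approx 0$, essentially no shrinkage), and

- Zeros ($\kappa_j \approx 1$, essentially complete shrinkage).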
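A Monte Carlo check in Python (an illustrative choice; function names are hypothetical) that $\lambda_j \sim C^+(0, 1)$ with $n\sigma^{-2}\tau^2 = 1$ yields $\kappa_j = 1/(1 + \lambda_j^2) \sim \text{Beta}(1/2, 1/2)$, whose CDF is $(2/\pi)\arcsin\sqrt{x}$. The half-Cauchy draws use the inverse CDF $\lambda = \tan(\pi u/2)$.

```python
import math
import random


def sample_kappa(n_draws, seed=1):
    """Draw kappa = 1 / (1 + lambda^2) with lambda ~ C+(0, 1), i.e. n * sigma^-2 * tau^2 = 1."""
    rng = random.Random(seed)
    out = []
    for _ in range(n_draws):
        lam = math.tan(math.pi * rng.random() / 2)  # half-Cauchy draw via the inverse CDF
        out.append(1.0 / (1.0 + lam**2))
    return out


def beta_half_half_cdf(x):
    """CDF of Beta(1/2, 1/2): (2/pi) * arcsin(sqrt(x))."""
    return (2 / math.pi) * math.asin(math.sqrt(x))


kappa = sample_kappa(200_000)
for cut in (0.1, 0.5, 0.9):
    empirical = sum(k <= cut for k in kappa) / len(kappa)
    print(cut, round(empirical, 3), round(beta_half_half_cdf(cut), 3))
```

The probability mass piles up near both ends: roughly 20% of draws fall below 0.1 and another 20% above 0.9, the two horseshoe "arms".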

Similarity to spike-and-slab

A horseshoe prior can be considered as a continuous approximation to the spike-and-slab prior.

The spike-and-slab places a discrete probability mass at exactly zero (the “spike”) and a separate distribution around non-zero values (the “slab”).

The horseshoe prior smoothly approximates this behavior with a very concentrated distribution near zero.

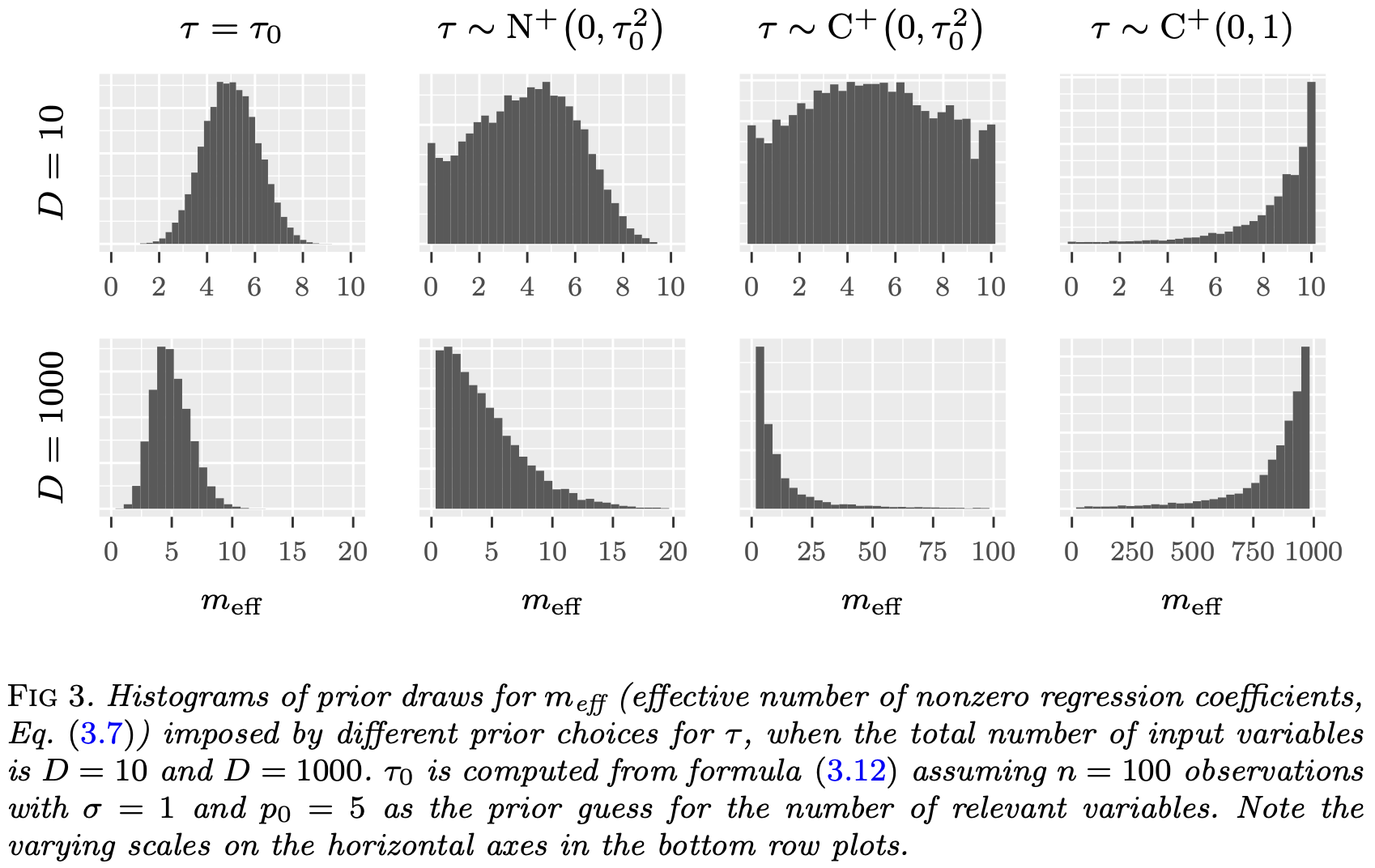

Choosing a prior for $\tau$

Carvalho et al. 2009 suggest

Polson and Scott 2011 recommend

Another prior comes from a quantity called the effective number of nonzero coefficients,
$$m_{\text{eff}} = \sum_{j=1}^{p}(1 - \kappa_j).$$

Global shrinkage parameter

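- For the horseshoe ($\lambda_j \sim C^+(0, 1)$), the prior mean can be shown to be,
$$\mathbb{E}[m_{\text{eff}} \mid \tau, \sigma] = \frac{\tau\sigma^{-1}\sqrt{n}}{1 + \tau\sigma^{-1}\sqrt{n}}\, p.$$

- Setting this prior mean equal to $p_0$, a prior guess for the number of nonzero coefficients, and solving for $\tau$ gives
$$\tau_0 = \frac{p_0}{p - p_0}\frac{\sigma}{\sqrt{n}}.$$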
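A Python sketch of the reference value $\tau_0 = \frac{p_0}{p - p_0}\frac{\sigma}{\sqrt{n}}$, together with a Monte Carlo check that plugging $\tau = \tau_0$ into the horseshoe gives $\mathbb{E}[m_{\text{eff}} \mid \tau, \sigma] \approx p_0$, using $m_{\text{eff}} = \sum_j (1 - \kappa_j)$ and $\kappa_j = 1/(1 + n\sigma^{-2}\tau^2\lambda_j^2)$. The problem sizes ($p = 100$, $n = 200$, $p_0 = 5$, $\sigma = 1$) and all function names are hypothetical.

```python
import math
import random


def tau0_reference(p, p0, sigma, n):
    """Reference scale tau_0 = p0 / (p - p0) * sigma / sqrt(n)."""
    return p0 / (p - p0) * sigma / math.sqrt(n)


def expected_meff(tau, sigma, n, p):
    """Closed-form prior mean of m_eff given tau and sigma under the horseshoe."""
    a = tau * math.sqrt(n) / sigma
    return a / (1 + a) * p


def meff_monte_carlo(tau, sigma, n, p, n_draws=200_000, seed=1):
    """Monte Carlo estimate of E[m_eff | tau, sigma] = p * E[1 - kappa_j]."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_draws):
        lam = math.tan(math.pi * rng.random() / 2)  # lambda_j ~ C+(0, 1)
        kappa = 1.0 / (1.0 + n * tau**2 * lam**2 / sigma**2)
        total += 1.0 - kappa
    return p * total / n_draws


tau0 = tau0_reference(p=100, p0=5, sigma=1.0, n=200)
print(tau0, expected_meff(tau0, 1.0, 200, 100), meff_monte_carlo(tau0, 1.0, 200, 100))
```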

Global shrinkage parameter

Non-Gaussian observation models

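- The reference value: $\tau_0 = \frac{p_0}{p - p_0}\frac{\sigma}{\sqrt{n}}$.

This framework can be applied to non-Gaussian observation models by plugging in a value for $\sigma$ derived from Gaussian approximations to the likelihood.

For example, for logistic regression the plug-in value is $\sigma = 1/\sqrt{\mu(1 - \mu)}$, where $\mu$ is the mean success probability; for $\mu = 1/2$, this gives $\sigma = 2$.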
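A Python sketch of the logistic-regression plug-in, assuming the pseudo-standard deviation $\sigma = 1/\sqrt{\mu(1-\mu)}$ with $\mu$ taken as, e.g., the observed proportion of successes (that choice of $\mu$ is an assumption of this sketch). The function names and problem sizes are hypothetical.

```python
import math


def pseudo_sigma_logistic(mu):
    """Plug-in sigma for logistic regression: 1 / sqrt(mu * (1 - mu))."""
    return 1.0 / math.sqrt(mu * (1.0 - mu))


def tau0_reference(p, p0, sigma, n):
    """Reference scale tau_0 = p0 / (p - p0) * sigma / sqrt(n)."""
    return p0 / (p - p0) * sigma / math.sqrt(n)


# Hypothetical binary-outcome problem: 100 predictors, 100 observations, 5 expected signals.
for mu in (0.5, 0.2):
    sigma = pseudo_sigma_logistic(mu)
    print(mu, sigma, tau0_reference(p=100, p0=5, sigma=sigma, n=100))
```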

Coding up the model in Stan

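The horseshoe model has the following form,
$$\begin{aligned}
Y_i &\sim N(\alpha + \mathbf{x}_i\boldsymbol{\beta}, \sigma^2),\\
\beta_j \mid \lambda_j, \tau &\sim N(0, \lambda_j^2\tau^2),\\
\lambda_j &\sim C^+(0, 1), \qquad \tau \sim C^+(0, \tau_0),\\
\alpha &\sim N(0, 3^2), \qquad \sigma \sim N^+(0, 3^2).
\end{aligned}$$

Efficient parameter transformation (the non-centered parameterization),
$$\beta_j = \tau\lambda_j z_j, \qquad z_j \sim N(0, 1),$$
which implies the same $\beta_j \mid \lambda_j, \tau \sim N(0, \lambda_j^2\tau^2)$ but is typically much easier for Stan's sampler to explore.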
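A Python sketch of the transformation $\beta_j = \tau\lambda_j z_j$ outside of Stan (function names are hypothetical). The first function draws one $\boldsymbol{\beta}$ vector from the horseshoe prior using half-Cauchy draws for $\tau$ and $\lambda_j$ and standard normals for $z_j$; the second checks empirically that, for fixed $\tau$ and $\lambda_j$, $\tau\lambda_j z_j$ has standard deviation $\tau\lambda_j$, as the centered form requires.

```python
import math
import random


def horseshoe_prior_draws(p, tau0, seed=1):
    """One draw of beta (length p) from the horseshoe prior via beta_j = tau * lambda_j * z_j."""
    rng = random.Random(seed)
    tau = tau0 * math.tan(math.pi * rng.random() / 2)               # tau ~ C+(0, tau0)
    lam = [math.tan(math.pi * rng.random() / 2) for _ in range(p)]  # lambda_j ~ C+(0, 1)
    z = [rng.gauss(0.0, 1.0) for _ in range(p)]                     # z_j ~ N(0, 1)
    return [tau * lj * zj for lj, zj in zip(lam, z)]


def conditional_sd_check(tau, lam, n_draws=200_000, seed=2):
    """Sample sd of tau * lam * z for fixed tau and lam; should be close to tau * lam."""
    rng = random.Random(seed)
    draws = [tau * lam * rng.gauss(0.0, 1.0) for _ in range(n_draws)]
    mean = sum(draws) / n_draws
    return math.sqrt(sum((d - mean) ** 2 for d in draws) / (n_draws - 1))


print(horseshoe_prior_draws(p=10, tau0=0.1))
print(conditional_sd_check(tau=0.5, lam=2.0))  # compare with 0.5 * 2.0 = 1.0
```

In the sampler, working with $z_j$ instead of $\beta_j$ removes the strong dependence between $\beta_j$ and its scale $\tau\lambda_j$, which is what makes the non-centered form easier to explore.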

Horseshoe in Stan

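```stan
data {
  int<lower = 1> n;              // number of observations
  int<lower = 1> p;              // number of (standardized) predictors
  vector[n] Y;                   // outcome
  matrix[n, p] X;                // design matrix
  real<lower = 0> tau0;          // scale of the half-Cauchy prior on tau
}
parameters {
  real alpha;                    // intercept
  real<lower = 0> sigma;         // residual standard deviation
  vector[p] z;                   // standard-normal auxiliary variables
  vector<lower = 0>[p] lambda;   // local shrinkage parameters
  real<lower = 0> tau;           // global shrinkage parameter
}
transformed parameters {
  vector[p] beta;
  // non-centered parameterization: beta_j ~ N(0, (tau * lambda_j)^2)
  beta = tau * lambda .* z;
}
model {
  // likelihood
  target += normal_lpdf(Y | alpha + X * beta, sigma);
  // population parameters (the lower bound makes sigma half-normal)
  target += normal_lpdf(alpha | 0, 3);
  target += normal_lpdf(sigma | 0, 3);
  // horseshoe prior
  target += std_normal_lpdf(z);
  target += cauchy_lpdf(lambda | 0, 1);   // lambda_j ~ C+(0, 1)
  target += cauchy_lpdf(tau | 0, tau0);   // tau ~ C+(0, tau0)
}
```

Prepare for next class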

Work on HW 03, which was just assigned.

Complete reading to prepare for next Tuesday’s lecture

Tuesday’s lecture: Classification